I have very little direct experience with language modeling apps. My instincts quickly recognized that my interactions with this tech were damaging to my spirit. Since stepping back, I have learned a lot about “Artificial Intelligence.” These Large Language Models (LLMs) aren’t the “thinking” machines popularly imagined in movies and contemporary discourse. Artificial General Intelligence (AGI) is the term the industry uses for a truly “thinking” machine. As far as I know, this does not yet exist. I put “thinking” in quotes because these engineers do not know what that word means. Philosophers have been wrestling with the concept of consciousness for as long as…well…we don’t know how long. The tech industry is investing a witheringly small amount of philosophical energy in the question. I’m glad. I don’t want them to figure this out, and I suspect it is an impossible feat.

Back to LLMs. These are complex modeling programs, and they do not value truth. Their job is to make the best guess at producing a result that pleases the user. They draw on each question you ask and “learn” to refine their language as you refine your inquiry.

It’s a very clever con man that gets the answers right most of the time.

It’s similar to the problem with tests. Being a good test taker doesn’t mean you have mastered the material; it means you have learned the game of the test. I was a smart kid, but I realized that once I mastered the concept of tests, I could turn down my brain power and coast through school.

LLMs are mastering the test, but they have no way to master the material.

“These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on.” https://en.m.wikipedia.org/wiki/Large_language_model

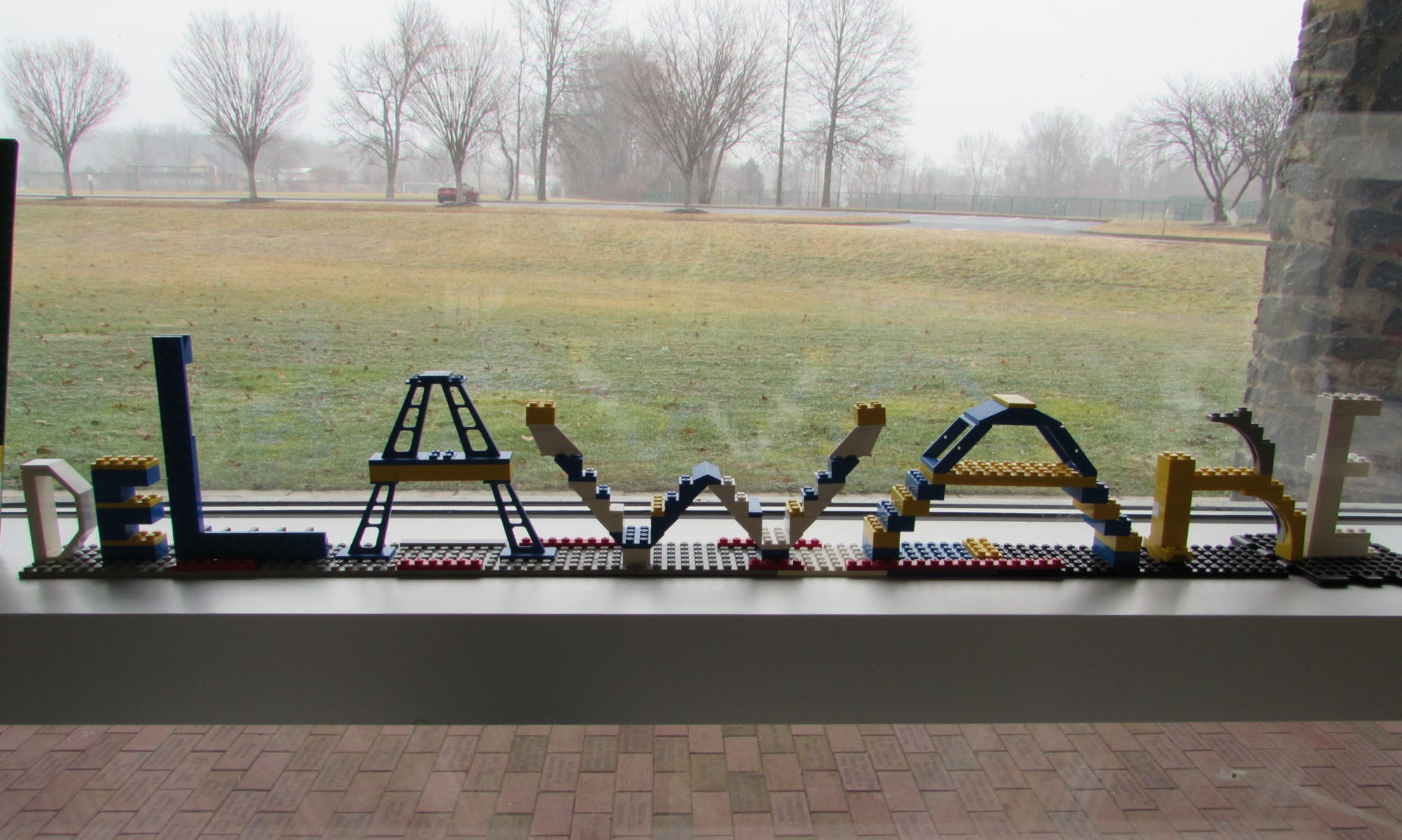

The healing journey of a widowed, unschooling badass in Delaware.